This is a live-blog from the Stanford Data on Purpose / Do Good Data “From Possibilities to Responsibilities” event. This is a summary of what the speakers talked about, captured by Rahul Bhargava and Catherine D’Ignazio. Any omissions or errors are likely my fault.

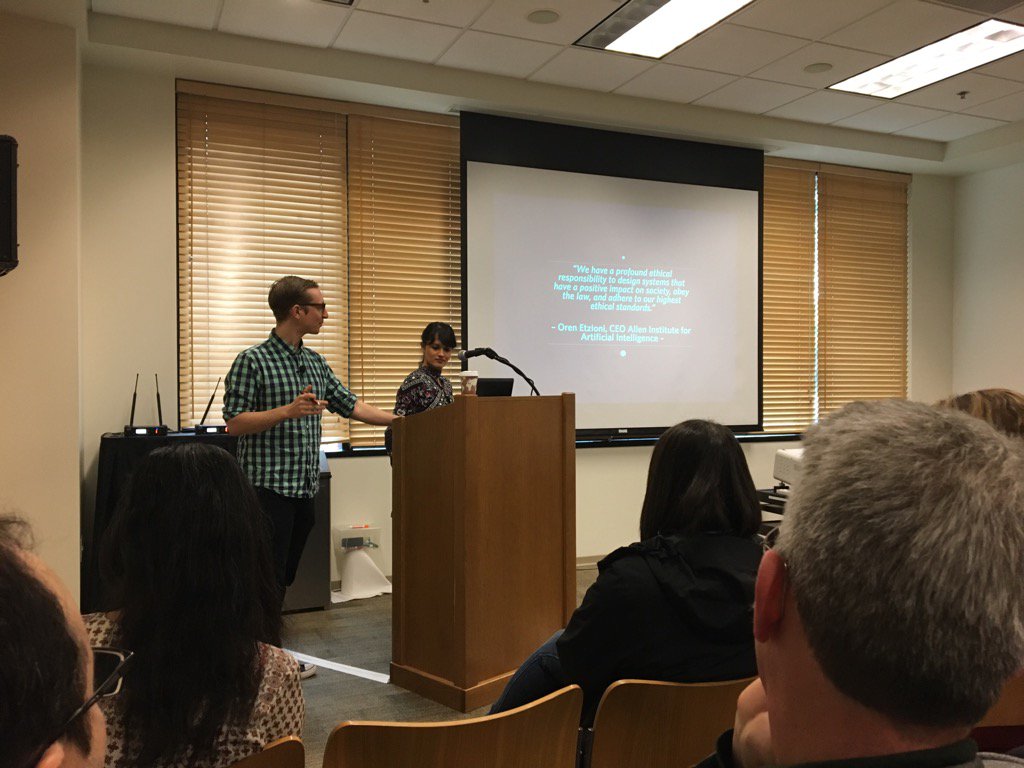

Human-Centered Data Science for Good: Creating Ethical Algorithms

Zara Rahman works at both Data & Society and the Engine Room, where she helps co-ordinate the Responsible Data Forum series of events. Jake Porway founded and runs DataKind.

Jake notes this is the buzzkill session about algorithms. He wants us all to walk away being able to critically assess algorithms.

How do Algorithms Touch our Lives?

They invite the audience to sketch out their interactions with digital technologies over the last 24 hours on a piece of paper. Stick figures and words are totally OK. One participant drew a clock, noting happy and sad moments with little faces. Uber and Airbnb got happy faces next to them. Trying to connect to the internet in the venue got a sad face. Here’s my drawing.

Next they ask where people were influenced by algorithms. One participant shares the flood warning we all received on our phones. Another mentions a bot in their Slack channel that queued up a task. Someone else mentions how news that happened yesterday filtered down to him; for instance, Hans Rosling’s death made it to him via social channels much more quickly than via technology channels. Someone else mentions how their heating had turned on automatically based on the temperature.

What is an Algorithm?

Jake shares that the Wikipedia-esque definition is pretty boring: “A set of rules that precisely defines a sequence of operations.” The examples we just heard demonstrate the reality of this. These are automated and do things on their own, like Netflix’s recommendation algorithm. The goal is to break down how these operate, and figure out how to intervene in what drives these thinking machines. Zara reminds us that even if you see the source code, that doesn’t really help you understand it. We usually just see the output.

An algorithm has some kind of goal it is trying to reach, and it takes actions to get there. For Netflix, the algorithm is trying to get you to watch more movies, while the actions are about showing you movies you are likely to want to watch. It tries to show you movies you might like; there is no incentive to show you a movie that might challenge you.

Algorithms use data to inform their decisions. In Netflix, the data input is what you have watched before, and what other people have been watching. There is also a feedback loop, based on how it is doing. It needs some way to figure out whether it is doing a good thing: did you click the movie, how much of it did you watch, how many stars did you give it. We can speculate about what those measurements are, but we have no way of knowing their metrics.
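To make the four pieces concrete (goal, actions, data, feedback), here is a minimal sketch in Python. It is purely illustrative and is not how Netflix actually works; the catalog, the titles, the `recommend` and `record_feedback` functions, and the 50% "watched enough" threshold are all assumptions made up for this example.

```python
from collections import Counter

# Hypothetical catalog mapping title -> genre. Not real Netflix data.
CATALOG = {
    "Space Chase": "sci-fi",
    "Robot Dawn": "sci-fi",
    "Quiet Fields": "documentary",
    "Laugh Track": "comedy",
}

def recommend(watch_history, catalog):
    """Action: suggest the unwatched title whose genre the viewer has watched most."""
    # Data: what the viewer has watched before, summarized by genre.
    genre_counts = Counter(catalog[title] for title in watch_history)
    unwatched = [title for title in catalog if title not in watch_history]
    # Sort titles first so ties are broken alphabetically, then pick the best match.
    return max(sorted(unwatched), key=lambda title: genre_counts[catalog[title]])

def record_feedback(watch_history, title, fraction_watched, threshold=0.5):
    """Feedback loop: only count a title as watched if the viewer stuck with it."""
    if fraction_watched >= threshold:
        watch_history.append(title)
    return watch_history

history = ["Space Chase"]
suggestion = recommend(history, CATALOG)  # the goal here is "get them to watch more"
history = record_feedback(history, suggestion, fraction_watched=0.9)
```

Even in this toy version, the viewer who starts with one sci-fi movie is steered toward more sci-fi: nothing in the goal rewards suggesting something unfamiliar.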

A participant asks about how Netflix is probably also nudging her towards content they have produced, since that is cheaper for them. The underlying business model can drive these algorithms. Zara responds that this idea that the algorithm operates “for your benefit” is very subjective. Jake notes that we can be very critical about their goal state.

Another participant notes that there are civic benefits, such as how Facebook can influence how many people vote.

The definition is tricky, notes someone else, because anything that runs automatically could be called an algorithm. Jake and Zara are focused in on data-driven algorithms. They use information about you and learning to correct themselves. The purest definition and how the word is used in media are very different. Data science, machine learning, artificial intelligence – these are all squishy terms that are evolving.

Critiquing Algorithms

They suggest looking at Twitter’s “Who to follow” feature. Participants break into small groups for 10 minutes to ask questions about this algorithm. Here are the questions and some responses that groups shared after chatting:

- What is the algorithm trying to get you to do?
  - They wanted to grow their user base, and then shifted to growing ad dollars
  - Showing global coverage, to show they are the network to be in
  - People name some unintended consequences like political polarization
- What activities does it use to do that?
- What data drives these decisions?
  - Can you pay for these positions? There could be an agreement based on what you are looking at and what Twitter recommends
- What data does it use to evaluate if it is successful?
  - It can track your hovers, clicks, etc. both on the recommendation and ads later on
  - If you don’t click to follow someone, that could be just as much signal
  - They might track the life of your relationship with this person (who you follow later because you followed their recommendation, etc.)
- Who has the power to influence these answers?

A participant notes that there were lots of secondary outcomes, which affected other people’s products based on their data. Folks note that the API opens up possibilities for democratic use and use for social good. Others note that Twitter data is highly expensive and not accessible to non-profits. Jake notes problems with doing research with Twitter data obtained through strange and mutant methods. Another participant notes they talked about discovering books to read and other things via Twitter. These reinforced their world views. Zara notes that these algorithms reinforce the voices that we hear (by gender, etc.). Jake notes the Filter Bubble argument: that these algorithms reinforce our views. Most of the features they bake in are positive ones, not negative.

But who has the power to change these things? Not just on Twitter, but health-care recommendations, Google, etc. One participant notes that in human interactions he is honest and open, but online he lies constantly. He doesn’t trust the medium, so he feeds it garbage on purpose. This matches his experiences in impoverished communities, where destruction is a key/only power. Someone else notes that the user can take action.

A participant asks what the legal or ethical standards should be. Someone responds that in non-profits the regulation comes from self-regulation and collective pressure. Zara notes that Twitter is worth nothing without its users.

Conclusion

Jake notes that we didn’t talk about it directly, but the ethical issues come up in relation to all these questions. These systems aren’t neutral.